Over the last few years, there has been an increase in development for Artificial Intelligence models, becoming more and more complex. Previously, models had an input, typically another layer, and an output layer, and dealt with discriminating simple tasks. Now, as we push the edge of innovation and science, we now have the ability for models to have several middle layers, or “hidden” layers. However, these do come with several drawbacks.

What is Deep Learning?

Before we can jump into the pros and cons, we need a set of definitions. By technicality, Deep Learning (DL) is a branch of Machine Learning (ML) which is under the umbrella term of Artificial Intelligence that has 7 different, but not exactly distinct categories. Each category in fact intertwines with each other in order to get the desired outcome. Deep Learning is a category in which a computer attempts to find a pattern within a set of data that a human could not find to sort out the information into the desired outputs.

In order to achieve such a result, computers use what is formerly called an artificial neural network: a method that resembles how the human brain functions using artificial neurons. An artificial neural network uses interconnected sets of these neurons to sort out information to correctly designate it to some value. There are, at minimum, 3 sets (layers) in the Neural Network:

1) Input Layer: This is where the input goes.

a) User-Input is 7.

2) Hidden Layers: This is the place where the input goes to get classified. For example,

number “7” has a “/” and a “-” on top. The hidden layer could ask,

b) Does this input have a “(,” “|,” “\,” or a “/”? It has a “?.”

c) Some of the options for a number with a “/” is having a “-,” “o” (the circle on top for the number 9), or “o” (for the circle on the bottom for 6). Seems like it has a “-”.

*Note: this example is for two hidden layers. There could be several hundred or even just one.

3) Output Layer: Like the name suggests, what is the value of the input?

d) It has a “/” and a “-,” therefore, it must be a “7.”

Humans first provide labeled training data in which the network learns some manner to differentiate between it. Humans then tell the computer whether it is right or wrong. This is called “supervised learning.” The other method is “unsupervised learning” where the computer is given unlabeled data and it must find patterns from it by itself.

However, the computers do not know the right steps for the network, so the networks undergo a process called “backpropagation.” Backpropagation is a step in which the computer uses what was to be the actual value and accordingly updates the weights and parameters to get the hoped output.

DL vs. ML

ML: Uses human intervention to tell the computer what/how to differentiate something. You use an explicit model to make/perform a task.

DL: Makes the computer find out the pattern within a set of data. Humans may necessarily not be able to recognize the patterns, but a computer could find it by itself with just the right amount of data.

Pros and Cons

Pros

1) Performance. Once trained for long enough, a neural network with the use of several GPUs and CPUs could do complex math equations that would take humans a long time.

2) Scaling. It can be scaled into taking in more inputs to process more and more.

3) Learning Capability. Automatically adapts to new things that aren’t in the dataset (e.g. a new word is given to it –> understand it and put it in its dataset). This is also known under the term supervised learning.

Cons

1) Data. Is really good with unstructured data (stuff that does not reside in some strict row by column way) but requires a ton of it.

2) Computational Power. More expensive to use. You need a high-level GPU in most cases because of the amount of cores it takes to run.

3) Training Time. It takes a ton of time to train it! Especially when you use more data + needs to be filtered out more in order to get a precise answer.

4) Overfitting. The computer’s ability to predict the correct output to its dataset gets so good that when new data is introduced, it becomes difficult to interpret the new data correctly.

Examples

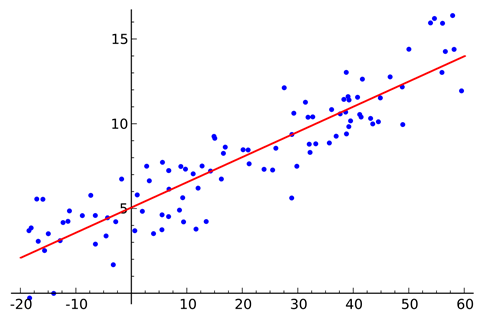

1) Linear Regression: Finding the line of best fit with multiple data points that may not be “strictly” correlated with each other. Here’s what I mean,

Credit: ActiveState

Credit: ActiveState

In our instance, we have two weights that can change in order to best fit this line.

We would change these (maybe make the intercept 3 and the slope 4) and see if they fit the line. If they don’t, you continue working on it until you can make it better.

Fun Fact: Rumor has it that ChatGPT 4 has around 1.76 trillion weights/parameters (the decoder). Still, just because you have a ton of weights doesn’t mean it’ll be good. This is where overfitting becomes an issue for models.

2) Natural Language Processing: In order to process and understand human content, Deep Learning Algorithms have to undergo rigorous testing in order to process, understand, and output an actual answer.

3) Medical Diagnostic Technologies: Giving computers the data of patients with and without a certain disease, could they identify it? In fact, they could, and found patterns that us humans could not find ourselves. For example, computers have used x-rays of patients rib cage area to conclude if they have COVID or not.

3) Computer Vision: Using DL Neural Networks, computers could learn how to “see” and identify things that we automatically see and understand. Car’s autopilot abilities are an example of this.